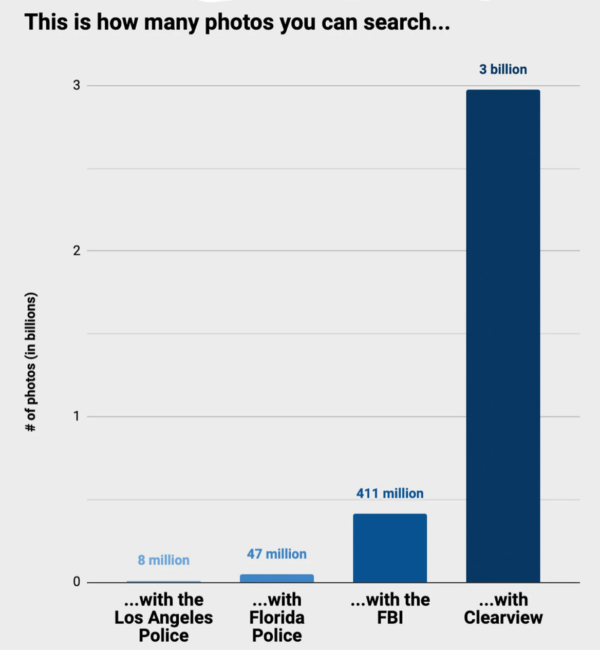

In February 2020, a BuzzFeed exposé revealed that the controversial facial recognition startup Clearview AI had thousands of government entities and private businesses around the world listed as clients. Clearview boasted a database of billions of photos scraped from social media and the web, and many of the company’s clients were law enforcement agencies around the world, including (allegedly) in the Netherlands. To find out a bit more about facial recognition technologies and policing in the Netherlands, I interviewed Naomi Appelman and Jair Schalkwijk.

Naomi is in the process of developing a project with Hans de Zwart, focused on racist technologies, and it is through this project that the collaboration between her and Jair has taken shape. Naomi also works at the Institute for Information Law, doing her PhD research under the broad umbrella of automated decision making, with a focus on online speech regulation and putting more control in users’ hands as opposed to those of the large tech companies. Jair, together with Dionne Abdoelhafiezkhan, is one of the driving forces behind Controle Alt Delete, an initiative for fair and effective policing, against racial profiling, and against police brutality. Controle Alt Delete helps people with filing complaints or criminal reports, as well as requesting footage from police about their arrest. They also advise the police on what policies or guidelines to implement, and lobby politicians in parliament or local city councils for different laws and regulations. In 2020, Controle Alt Delete assisted around 200 people who came to them with complaints about the police, particularly in relation to racial profiling. The organization also engages in research, looking at sources that are available online but reassembling them in a new way to show problems in a different light.

Historically, the fingerprint has been used as the main way of identifying a potential criminal suspect, but now it is the face that has increasing identifying power. We usually know when someone has taken our fingerprints, but we rarely know when our faces are being scanned and documented in public and private spaces. In our interview we spoke about the lack of transparency in the use of facial recognition technologies by the Dutch police, highlighting the asymmetry of information, power, and access within these relations.

* This interview has been edited for clarity.

Margarita Osipian (MO): Controle Alt Delete is focused on ending racial profiling and through your advocacy and lobbying work you have succeeded in including racial profiling in the public debate around racism, having it be acknowledged by the highest police chief and included in the policy of the national police, amongst others. The examples on your website statement seem to focus on direct interactions between police and the public. How have you seen ethnic profiling shift (or transfer) from these face-to-face interactions to digital systems within the police force?

Jair Schalkwijk (JS): I think in the end, there’s always a one-to-one, a civilian to police, or police to civilian, interaction. It always ends up that way. So for the civilian, it’s not always clear if there was a digital chain of reactions before the police went up to them. For example, when police drive behind someone’s car, they do a check in their system before they stop the car. But most civilians are not aware of that. What we also see is that the police work with CAS, a crime anticipation system, which is operational in nearly all of the Netherlands at the moment. They use it to decide where to send police units, and at what time. The input given to CAS later helps the police decide where to send units. That input comes from decisions that police officers made earlier, which is part of why it’s problematic, because the input isn’t neutral. The input is, among other things, the result of racial profiling, but the output is later presented by CAS as neutral data. There has been a shift in the sense that there’s more digital stuff going on in the background. But for civilians, this is not noticeable.

MO: Your answer really highlights how we don’t think about where the chain ends in a way. So we often are looking at these digital technologies but we are not thinking through how they actually manifest in these one-to-one, police to civilian, interactions.

JS: It’s interesting, because the Dutch police did a pilot with CAS first, and then the Politie Academie, the Police Academy, reviewed the pilot and conducted research into it. And the research showed that working with CAS did not improve effective policing in the neighborhood that they did the pilot in. Even the project leader of CAS admits that “the functioning of CAS is not scientifically proven and difficult to measure”. Nonetheless, they decided on the basis of that research to implement CAS in all of the Netherlands. So it’s completely unclear if it actually helps the police in making better decisions. Whereas the risk of being drawn into the same neighborhood again, and again, and again, because that’s what the system tells you, is not so apparent (well, at least not to the police).

MO: A few years ago I went to a symposium at the KABK called Violent Patterns, put on by the Non Linear Narrative department, which focused on public security under algorithmic control. One of the speakers was a representative of the Dutch Police, and she outlined all the different digital tools that the police have at their disposal, including CAS. But it sounds to me like it’s not necessarily clear how much these tools are being implemented.

Naomi Appelman (NA): Yes, that’s correct. They’re not being transparent about how these systems are used and what their actual function is in day to day policing.

MO: Naomi, is there anything you might want to add to this in relation to your own research? Have you seen an increase in the use of these kinds of tools and technologies?

NA: I think you can definitely see an increase. It depends a bit on the timeline, of course, but in the past 20 years there has definitely been an increase in the use of these systems. But for me, the larger point is how opaque it is: how the systems are used, when they are implemented, the size, and how they actually work. A lot of the information is just from public news and not official documents or publications. So that is more of a preliminary problem in the sense that you cannot really grasp what’s going on, because you just don’t really know. And that’s also what I wanted to say in relation to facial recognition and the Dutch police. The only sources we really have are the police website, where they communicate (but then that’s not an official source, it’s just the police putting something on their website), and loads of newspaper articles. And some discussions in Parliament, but not a lot. And that’s then everything you have in relation to knowledge about a certain technology. That’s just not enough to really get a sense of what’s going on, what the impact is, how the technologies are used, etc. So that to me is more the preliminary point, the lack of transparency.

JS: I agree. And you see that with other topics related to the police as well. So for example, in relation to racial profiling, the police don’t even collect data on racial profiling. Arrests are being registered by the police, but police stops that don’t lead to an arrest are only being registered if the police officer at that moment decides to make a registration. This lack of registrations is problematic because as a result the police don’t have any insight into their own work: are they performing fair and effective stops? But it is also problematic for critical organizations like ours who want to follow the police and ask the police to be transparent about what they do, so that we can hold them publicly accountable for their work. And not collecting any data, not being transparent about what you’re doing, this is problematic for that reason.

MO: Do you think this is because the police don’t have enough resources? Or is it another kind of strategy or underlying issue? What is their reasoning for not tracking their own movements or keeping data about their interactions to get a sense of how present the police might be in certain neighborhoods?

JS: The police definitely have the resources to do this. They’re doing all kinds of work. They have plenty of money, they have the brains, and they have the resources. So they can definitely do it. But they are just very reluctant to be transparent: perhaps they don’t want to be held accountable. Not by civilians, but not even by the Ministry of Justice. So even the Ministry of Justice doesn’t have the relevant information to assess what the police are doing. And this is relating to racial profiling and relating to police violence. There’s just no data available to assess whether they’re doing their job in a just and effective way.

MO: I think you answered my question a bit earlier, but it seems like you’re looking into these kinds of technologies, but starting from the point of a police to civilian interaction. Is that correct?

JS: With all the work that we’re doing, we’re starting with the perspective of civilians, of people. So that’s always our starting point. I think the interaction with the police officer is then the second point, and then technology, but also the weapons for example that they have, comes after that. And then above that there are the policies and laws regulating police behaviour and the means that the police have. With Controle Alt Delete we focus on all of these aspects: laws, policies, technologies and means of the police officer, and the person affected.

MO: In a July 2019 article on DutchNews.nl, it is stated that the Dutch police facial recognition database includes 1.3 million people and that the police also have access to a database containing photographs of refugees and undocumented migrants. In this article, Lotte Houwing from Bits of Freedom noted that the debate around facial recognition technologies is just starting. Over one year later, do you see any shifts in the public debate, or on the legal level, around facial recognition?

NA: We discussed this question together in preparation for this interview, and I think the conclusion is that there is not that much of a public debate. There are, of course, people talking about this and of course people are engaged, but not the broader public. It’s not really as alive as you might expect or perhaps want it to be.

JS: After the BuzzFeed article came out which mentioned that the Dutch police have been, or might be, using Clearview, the NOS also published an article about the topic. But that was only in the news for one day, or maybe two days. The BuzzFeed article states that Clearview is a problematic supplier because it works with pictures harvested from the internet. In April of this year, there was a debate in the Tweede Kamer, where a member of Parliament asked questions about the use of Clearview and the Minister of Justice said that he doesn’t know if governmental parties have used the Clearview facial recognition database or not. I think this is maybe the only recent example of a public debate. But also, this didn’t really stir a larger public debate.

NA: This comes back again to my earlier point about the lack of resources we have in discussing these new technologies. If you’re talking about more automated systems in general, there’s a lot that is being implemented at a municipal level. However, there are not a lot of documents or official sources to really track what’s going on. I think that’s the same with facial recognition. We should also remember that it’s very difficult to start the debate if you have very thin evidence of what’s going on. Because then your only references are a few newspaper articles every time and that’s just not the same body of evidence where you can really point and say “look, they’re doing this”. I think that’s a really important point.

MO: Yeah, if the police are not being transparent about the kinds of databases or tools they’re working with, and how that actually impacts their interactions with civilians, then you really have so little to work with.

JS: As I mentioned earlier, in the debate in the Tweede Kamer, the Minister of Justice responded to Parliament that he’s not aware that there’s any contractual relationship between Clearview and Dutch governmental institutions. But it is possible that they might be working with an in-between supplier. It might also be interesting to investigate if there may be another party that’s acting as an in-between supplier in other countries that might also be acting as an in-between here.

MO: In relation to this facial recognition database that has at least 1.3 million people in it, the one that was in the DutchNews article that we spoke about earlier, I was wondering if you know how they collect those initial images? Is it only from people that have been arrested in the Netherlands? Or is it bigger than that?

NA: There’s an article from 2016, when the Dutch police started with facial recognition, and there they state that government databases such as passports and driver’s licenses are not part of that facial recognition system.

JS: It’s important to know that it makes a lot of difference how a photo is taken and where it is collected. So legally, it matters where it’s been collected: if it was on social media, with a body cam, a public camera, or in the police office. And the quality of the picture also matters a lot, obviously. The worse the quality of the picture, the more often identification will fail.

MO: If I correctly understand our conversation so far, due to the lack of transparency and publicly available information, it is very difficult to get a clear sense of how the Dutch police are using these facial recognition technologies and how they’re using these databases in relation to other tools that they have, for example, the predictive policing technologies.

JS: That is correct. What we do know is that they are using these technologies. For example, this article in Trouw says that in 2018 they used facial recognition technology 1300 times. But it is not clear whether that was used in the police office itself, or if they used body cameras that are also able to do facial recognition, or whether it was done with cameras that are being installed on the highways. Due to the lack of transparency by the police and the Ministry of Justice, it is very difficult for us to get the full picture of the situation.

MO: It’s really jarring to see this lack of transparency and lack of broader public debate. I wanted to continue by linking to the situation in the US. There was a recent article on The Verge, about how the NYPD used facial recognition technologies to track down a BLM activist. And in many activist circles in the US it is highly discouraged to publish photographs of protestors as the images can be used against them. In the recent protests in the Netherlands I did not see requests for images of protests and protestors to not be published. Can you talk about why this difference might exist in relation to the situation in the Netherlands?

NA: I think in the Netherlands we can make a bit of a separation between more activist people who go to protests regularly and really fight for the cause and people who, once in a while, go to a demonstration when they identify with a specific topic. For the people in the inner circle, the police definitely keep tabs on them and they know that. So posting pictures of your group is really not done. But the regular person that goes to a protest once a year or so is not really thinking about facial recognition or posting photos of themselves.

JS: It’s also interesting to note that the police are very active in collecting photos themselves, like these images from a raid in Utrecht. So if there’s a demonstration, they generally send a police van with a camera mounted on top. That van is capturing still images and videos of people that possibly are involved in criminal behaviour, so that afterwards they can track the people and pursue them in court. They’re taking a lot of footage during such a demonstration. Whereas I think it’s obvious that they’re collecting images of people that, for example, throw rocks at the police, they must also collect a lot of footage of people that have not been throwing rocks at the police. So what do they do with that data? Do they keep that? Do they put it in a folder with activists or protesters? Do they try to link those images to names or not? In light of the police actively collecting footage, again we have the situation where we don’t know exactly what happens to the footage. They also send this camera van to demonstrations where there’s nobody throwing rocks at the police. And then what do they do with the images?

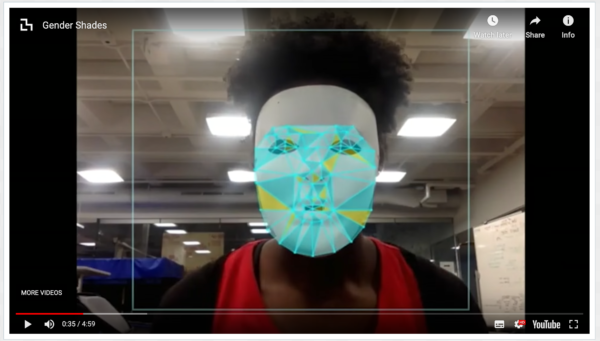

MO: I’d like to focus now on racial bias and ethnic profiling in facial recognition technologies. Work and research from the Algorithmic Justice League, amongst others, reveals the racial bias within facial recognition technologies. This could include the inability to recognise a face at all, misgendering, and misinterpretation of facial characteristics. An MIT study of three commercial gender-recognition systems found they had error rates of up to 34% for dark-skinned women, a rate nearly 49 times that for white men. In the context of the Netherlands, what could this kind of bias lead to in terms of ethnic profiling?

JS: We would be talking about the situation in which the police would be using facial recognition technology in order to identify people on the street that are suspected of having committed a crime. In that case, we could definitely have this kind of bias in the Netherlands as well. As far as we know, the police don’t do that. The situation is different when someone is already arrested and is being held in the police station, and then they decide to find out who this person is by doing a facial recognition scan or by checking the fingerprints. This is a different situation, because then you are being suspected of a crime and they try to analyse who you are. But the situation where we talk about racial profiling is where the police are making decisions on who to stop and who not to stop, and these are mostly proactive police stops. If this type of decision were (partly) made on the basis of facial recognition software, that would be problematic.

MO: I wanted to end on a bit of a positive note with my last question. With the work we do at The Hmm, when we think about technology we don’t want to put some kind of moralistic value on technology itself. In Shoshana Zuboff’s book The Age of Surveillance Capitalism, she writes that “in a modern, capitalist society, technology was, is, and always will be an expression of the economic objectives that direct it into action” (16). I also read this as pointing to the need to separate the technology from the economic logic that activates it. In light of that, I was curious if you could imagine any positive uses for facial recognition technologies, as a way to prevent or deter ethnic profiling? For example, are people’s human biases potentially more unreliable than the biases of facial recognition technologies?

NA: In general, I don’t really agree with the idea of separating the technology from the context because I think of course if you just let your imagination run wild you’ll come up with a myriad of positive and great things that technology in general, and AI specifically, could do for society and policing. But I think that that’s the danger of ignoring the problem, because I think the problem is more the system in which such technology is used and how such technology can enhance already existing problems or create new ones. So on the one hand, of course there are positive uses of AI that we can imagine within the context of policing, but you need to also adjust the system or the institution of policing in order for those positive effects to become possible. On the other hand, I also think that there are some types of technologies that even within well-functioning institutions, with sufficient safeguards, I would still say they’re not desirable. But that’s also a political view because, for instance, I wouldn’t want facial recognition in public spaces. Even if you have the proper institutional safeguards and it’s not being used for policing or within a changed form of policing, I still would be opposed to that.

JS: You can imagine a situation in which the technique would work flawlessly. For example, with DNA research. Everybody has unique DNA and we’re able to make a comparison between the DNA of someone who’s being suspected and DNA material found at the crime scene. If there’s a match you can arrest that person and question them and try to see if they were involved in the crime. And then the case would continue into the criminal justice system. So, a very good solution to help solve crime would be to collect DNA material of everybody in the Netherlands and then collect the material at every crime scene. Then you could do a check every month or every week, why not do it every day, to see which people were at the crime scene and might be involved. But we don’t want a society like that because everybody would be a suspect all the time. In addition, the risk of this kind of technology falling into the wrong hands would be tremendous. The same goes for facial recognition. If it really worked flawlessly, there would be so many advantages to it, because everybody would be known all the time. It could be cross-referenced to a system which has all criminal suspects in it. You would however be under constant surveillance by the police. And again, I don’t think this is the kind of society that we want, but it would definitely help in reducing crime. You ask, “are people’s human biases potentially more unreliable than the biases of facial recognition technologies?” I think this is a great question because we do see that human biases are very unreliable. Because people have biases, the decisions that they make are also biased, and they don’t always make good judgments. But that doesn’t necessarily mean that it’s better to build a facial recognition system, even if it worked flawlessly. Again, we need to ask ourselves, is this the kind of society that we want?