Besides her work as an actress, in the Harry Potter movies (2001-2011), Beauty and the Beast (2017), and most recently Little Women (2019), Emma Watson is a well-known activist for gender equality. She uses her celebrity status to improve the position of women. You’ll probably remember the speech she gave for the United Nations’ HeForShe campaign in 2014, where she emphasised that men and women should have equal rights and called on men in particular to promote this idea too. She remains committed to fighting for women’s rights. In 2019 Watson helped to launch a legal helpline for people who have suffered sexual harassment at work, and her statement about being ‘self-partnered’, instead of ‘single’, went viral. But that same year also saw something at odds with the actress’s activist and independent persona: more and more deepfake porn videos starring Watson appeared. Emma Watson is among the five celebrities most often used in deepfake porn. Using the many available film scenes featuring the actress, deepfake technology can morph her face into any other film. Why is Emma Watson so popular in deepfake porn? And what is the impact of deepfake porn on the position of women in general?

Deepfakes and porn have always been intertwined. Even the word deepfake comes from the name of a Reddit user, ‘deepfakes’, who shared deepfake porn videos on the platform in 2017. Most deepfakes are still porn, and most deepfake porn uses the likeness of celebrities. Deepfakes have mainly been made by and for men, starring women without their consent. You only need a few computer skills to turn any actress into a porn actress. Despite the ease of creating these videos, only a small group of celebrities, besides Watson, keeps recurring. In the West, Jessica Alba, Daisy Ridley, Margot Robbie and Gal Gadot are favourites but, as stated in Deeptrace’s report The State of Deepfakes 2019, one quarter of all deepfake porn stars South Korean K-pop singers.

As an actress, or indeed as any public figure, you know that people you’ve never met can have sexual fantasies about you. Usually, these fantasies stay hidden in those people’s minds. It must be shocking to see your face in a porn movie, without your consent or involvement. The advantage of being in the public eye is that it is usually obvious that you’ve had nothing to do with these films. Emma Watson’s deepfakes are clearly closer to fantasy than to reality. Since everybody knows they’re fake, they probably won’t damage her image and reputation very much.

So why do the deepfakers choose Emma Watson? She is not especially noted for conspicuous sex appeal and she hardly ever wears sexy clothes in public. The one time she did a nearly topless photo shoot with Vanity Fair, she was immediately criticised. Nevertheless, a large group of men online adore her. On r/EmmaWatsonBum, men discuss photos of her modest backside. Modest, that is, compared with today’s standard set by Kim Kardashian. These men appreciate Watson because they grew up with her character in the Harry Potter series. Over the span of these eight movies they saw her become an adult, along with themselves. As teenagers, they probably dreamed about her. Now they can make this fantasy tangible.

But deepfake porn is in fact harmful for a large group of women: women who don’t have a large stage from which to disprove the false videos, or who come from sexually repressive cultures. At the beginning of 2018, the Indian investigative journalist Rana Ayyub campaigned for justice for the rape and murder of an 8-year-old Kashmiri girl. This horrible crime was allegedly committed by a leader of the ruling nationalist Bharatiya Janata Party (BJP). The party supported and stood by the alleged rapist, prompting Ayyub to state in international media that India should be ashamed of protecting child rapists. In April that year, a deepfake porn video featuring Ayyub was spread online. Because of the lack of women’s rights and sexual freedoms in India, the porn video was extremely damaging. It was obviously fake. The actress in the video had straight hair instead of curly and seemed much younger than Ayyub. Still, Ayyub was shocked to see her own face in the video and felt extremely embarrassed. She couldn’t face anyone in her family. She was flooded with messages and a day after the video’s release she went to the hospital with heart palpitations and anxiety. “You can call yourself a journalist, you can call yourself a feminist but in that moment, I just couldn’t see through the humiliation”, Ayyub wrote in a Huffington Post article. The deepfake was made by someone from the BJP. When the video was shared via their fan page, it escalated. Via WhatsApp, the video ended up on almost every phone in India. Ayyub was flooded with messages from people asking for her rates for sex. Eventually, international pressure from the United Nations was needed to force the Indian police to take action against the abuse. As a critical journalist Ayyub is regularly under fire, but this experience deeply impacted her: “From the day the video was published, I have not been the same person. I used to be very opinionated, now I’m much more cautious about what I post online. I’ve self-censored quite a bit out of necessity.” Ayyub’s story falls in line with many cases where women’s bodies and their sexuality are used against them, especially women who criticise certain political systems. It shows that deepfakes can be a powerful weapon for this.

In 2019, the number of deepfakes doubled from the year before. The machine learning and artificial intelligence techniques with which deepfakes are made improve when you ‘train’ them with more material. So the explosive growth of deepfakes means that the videos are becoming more realistic and the software more accessible. The easier it gets to make deepfakes, the more videos will be available for a smaller and more specific audience, starring not celebrities but individuals like you and me. VICE journalist Evan Jacoby paid only $30 to make a deepfake with himself as the lead. Apparently, there are face-swapping marketplaces where, armed with just a 15-second video of a person, you can ask a deepfake creator to morph that person’s face into any other video. Jacoby interviewed numerous deepfake creators for the article. One creator wouldn’t mind putting a random girl into a deepfake porn video, as long as he gets paid and the girl is not too young. Another creator was only committed to making humorous deepfakes. Neither felt responsible for the consequences. According to these creators, people are far too relaxed about posting images and videos of themselves on social media platforms. In their opinion, whatever is shared in public is free to use for any end goal.

In our digitally saturated environment, teenagers who don’t have a 15-second video of themselves online are rare. Awareness of what can happen with these images has been insufficient. When chat programs and webcams found their way onto personal computers in people’s homes, young people (often girls) shared images and live feeds of (parts of) their naked bodies with chat partners whom they thought they could trust. When these images were spread all over school, it had disastrous consequences for these teens. Deepfakes take this exploitation and invasion of sexual privacy even further. The girls don’t even have to be involved in the process, and they lose all control over their image while still suffering the same impact and embarrassment.

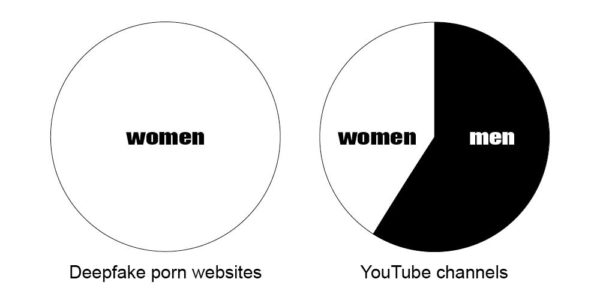

During his talks with deepfake creators, Jacoby also found out that deepfake porn videos with images of ex-girlfriends are frequently requested. These deepfakes wouldn’t be interesting for a general online audience, but would mainly be for personal use. So what is the main difference between having a sexual fantasy involving your ex and making a deepfake from this fantasy? Carl Öhman, PhD candidate at the Digital Ethics Lab, argues that, from a more conceptual perspective, there is only a small difference between fantasising about an ex and making a deepfake with them in it. Suppose your ex makes a deepfake porn video starring you, and it was technically possible to ensure that you would never see the deepfake and that your ex could not share it with others. Then there’s hardly any difference between the creation of the deepfake and the creation of the fantasy. Öhman says that the real problem is that the consumption of deepfakes is an undeniably gendered phenomenon. Both men and women have sexual fantasies, and these fantasies include both men and women. But, so far, deepfake porn videos are made only by men and star only women. As a result of this imbalance, deepfake technology does play a role in the social degradation of the position of women.

In non-pornographic deepfake videos there are even more men than women in the lead role, which highlights the deeply gendered nature of deepfake porn. Deeptrace reports that deepfake porn exclusively targets and harms women (see image above), with male deepfake makers treating women like puppets. Without permission, they place women in all sorts of positions and situations only for their own gain: to make their own sexual fantasies come true or to intentionally harm and slander them. This abuse of power is not confined to the women whose faces are pasted onto the bodies of porn actresses. The porn actresses from the original videos did not choose for their bodies to be deconstructed, attached to a celebrity face, and spread online to be viewed by so many people either. Nor did they agree to be part of the harassment of other women. No less than 96% of all deepfakes are porn, and all deepfake porn videos undermine the position of women. The fact that this new technology is used particularly to devalue and objectify women’s bodies shows that we still have a long way to go to achieve gender equality, which is what Watson is campaigning for. Rather than a threat to the truth, this is the real threat of deepfakes.