On 17 June 2020 we organised The Hmm ON deepfakes, an event on our self-built livestream platform live.thehmm.nl, to take a closer look at deepfakes. With the event we wanted to find out whether deepfakes are the newest weapon for spreading fake news, or just harmless fun. We invited three speakers to explore this question from three different perspectives.

Our first speaker was Giorgio Patrini, co-founder and CEO of Deeptrace, an organisation that researches and maps the deepfake landscape. In addition, Deeptrace is working on a detection solution that guarantees the integrity of visual media. Giorgio taught us that:

- The number of deepfakes is growing explosively. In the first half of 2020, 52,000 new deepfakes were uploaded to the internet.

- Most deepfakes are pornographic.

- The human eye is very bad at recognising deepfakes. When we try to spot one, we do only 8% better than a random guess.

- Deepfake technology is accessible to everyone through open-source software that is freely available online.

- If you are not tech-savvy enough to create one yourself, there are several blogs where you can ‘order’ a custom-made deepfake video.

- Deepfakes are used for misinformation and social media fraud, but Giorgio is most concerned about deepfakes that attack (the reputation of) individuals or brands. He sees this happening more and more.

According to Giorgio, we should stop the spread of deepfakes as soon as possible. The detection tool Deeptrace is working on is based on data-driven deep learning technology. The organisation also keeps an eye on malicious deepfake creators and tries to find out what their incentives are.

Jaron Harambam, a sociologist who researches conspiracy theories, thinks we shouldn’t be so worried about deepfakes. In his presentation he unpacked the moral panic surrounding the technology by looking back through history. Jaron showed us that:

- Every new technology is first seen as a threat.

- When television first came out, there was the same fear that it would affect our concept of truth. Some people were convinced that even its name, tell-a-vision, showed that the medium would be used for manipulation.

- It is important to put the videos in context. A deepfaked Obama saying “Trump is a total and complete dipshit” is clearly fake, because we know Obama from many media appearances and know he would never say something like this.

Jaron thinks that our relationship to truth, fakes and moving images is changing. Just as Photoshop did for still images, deepfake technologies mean we can no longer automatically trust moving images.

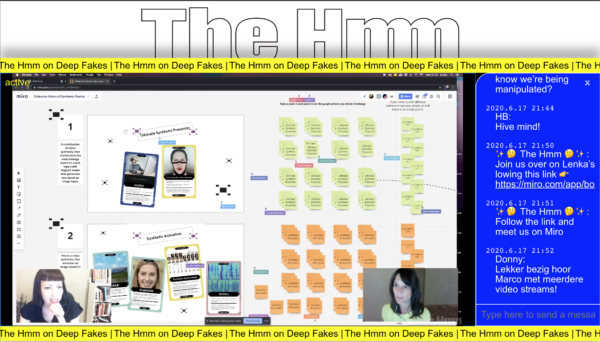

Finally, independent research designer and visual speculator Lenka Hamosova introduced us to the magic of deepfakes. According to Lenka, what we understand by the term deepfakes is just the tip of the iceberg. She prefers to call the technology ‘synthetic media’, since AI-generated media can do much more than face swaps. Using her Synthetic media brainstorming cards, she showed the endless possibilities for creating synthetic realities and challenged us to come up with scenarios ourselves. With StyleGAN you can create fake people, fake cats or fake living rooms. GauGAN generates photorealistic scenes from just a simple drawing. What if you combine those two techniques?

Lenka thinks that:

- Deepfakes will be the death of stock photos.

- Synthetic media will soon be integrated into our daily lives. Online fashion stores could use AI to adjust the gender, body shape and skin tone of the models presenting their clothing, so they no longer have to hire a photographer. And if you look up a cake recipe on your phone, it’s not such a crazy idea that an ad pops up in which your mom recommends the right brand of butter to use.

- Instead of being scared, we should embrace the creative potential of deepfakes.

As a deepfake (or synthetic media) creator herself, she has experienced that knowing more dispels the fear of the technology. It is important to understand how AI models work and to think about what kind of future we want. That’s why she invited us to an interactive thinking session on the ethical side of some of the scenarios she described. Is it a good or a bad development? Will it affect us on a large scale or a small scale? It’s important to look at these questions and discuss them together.

Most of our visitors were not concerned that deepfakes would affect the 2020 US elections, but they were convinced that we need a tool to detect deepfakes. We may not have to worry that the technology threatens our democracy, but we should not underestimate the danger. Personal blackmail can also be very damaging, as we show in our article ‘If deepfakes are a threat, this is it’. It’s important to be aware of the most influential AI models used for generating synthetic media, and to discuss AI ethics together. That is what we did during The Hmm ON deepfakes.